The great potential of AI: Scaling wiki-work

At Wikimedia, AIs help us support quality control work, task routing, and other critical infrastructures for maintaining Wikipedia and other Wikimedia wikis. To make it easier to support wiki-work with AIs, we built and maintain ORES, an open AI service that provides several types of machine predictions to Wikimedia volunteers. For example, ORES’ damage detection system flags edits that appear (to a machine) to be sketchy, and this flag helps steer a real live human to review that edit and make sure it gets cleaned up if it is a problem. By highlighting edits that need review, we can reduce the overall reviewing workload of our volunteers by a factor of 10. This turns a 270-hour-per-day job into a 27-hour-per-day job. It also means that Wikipedia could grow tenfold and our volunteers could still keep up with the workload.
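To make the arithmetic concrete, here is a minimal sketch of score-based triage. It is not ORES code: the `ScoredEdit` type, the revision IDs, the scores, and the 0.5 threshold are all invented for illustration. Only the 270-hour baseline and the factor of 10 come from the figures above.

```python
from dataclasses import dataclass


@dataclass
class ScoredEdit:
    rev_id: int                  # hypothetical revision ID
    damaging_probability: float  # hypothetical model score in [0, 1]


def edits_needing_review(edits, threshold):
    """Return only the edits whose score meets or exceeds the threshold."""
    return [e for e in edits if e.damaging_probability >= threshold]


def daily_review_hours(baseline_hours, flagged_count, total_count):
    """Scale the baseline workload by the share of edits that get flagged."""
    return baseline_hours * flagged_count / total_count


# Invented scores: one edit in ten looks suspicious to the model.
scores = [0.02, 0.91, 0.05, 0.10, 0.03, 0.01, 0.07, 0.04, 0.08, 0.06]
edits = [ScoredEdit(rev_id=1000 + i, damaging_probability=p)
         for i, p in enumerate(scores)]

flagged = edits_needing_review(edits, threshold=0.5)
hours = daily_review_hours(270, len(flagged), len(edits))

print(f"{len(flagged)} of {len(edits)} edits routed to a human reviewer")
print(f"Estimated review workload: {hours} hours per day")
```

In practice the threshold is a trade-off: lowering it sends more edits to human reviewers and catches more damage, while raising it saves reviewer time at the cost of missing some problems.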

By deploying AIs that make Wikipedians’ work more efficient, we make it easier to grow and to keep Wikipedia’s doors open. If counter-vandalism or another type of maintenance work in Wikipedia were to overwhelm our capacity, that would threaten our ability to keep Wikipedia the free encyclopedia that anyone can edit. This is exactly what happened recently around one of the quality control activities in Wikipedia. Reviewing new articles for vandalism and notability is a huge burden in English Wikipedia. Over 1,600 new article creations need to be reviewed every day. The group of volunteers who review new articles couldn’t keep up, and in the face of a growing backlog, they decided to disable new article creation for new editors. We’re actively working on AIs that can help filter and route new page creations, to lessen the workload and to make it easier to reopen article creation to new editors.

Without a strategy for increasing the efficiency of review work, reopening article creation to new editors would just re-introduce the same burden.

Biases and diversity

But with all of the efficiency benefits that come with AI, we must be wary of problems. Humans have biases whether we choose to have them or not. When we train AIs to replicate human judgement, the best we can hope for is that those AIs will only be biased in the same ways as their instructors. Worse, these and additional biases can appear in insidious ways because an AI is far more difficult to interrogate than a real live human being. Recently, the media and the research literature have been discussing ways in which bias creeps into AIs like ours. For example, Zeynep Tufekci has warned that “We are in a new age of machine intelligence, and we should all be a little scared.”[1] And when we first announced ORES to our editing community, our own Wnt urged us to “Please exercise extreme caution to avoid encoding racism or other biases into an AI scheme. […] My feeling is that editors should keep a healthy skepticism – this was a project meant to be written, and reviewed, by people.”[2] We agree. AI-reinforced biases have the potential to exacerbate already embattled diversity in Wikipedia, especially if they are used to help our volunteers more efficiently reject contributions that don’t fit the mold of what is typically accepted.

To address these biases directly, we’re trying a strategy that may be novel but will be familiar to Wikipedians. We’re working to open up ORES, our AI service, to be publicly audited by our volunteers. When we first deployed ORES, we noticed that many of our volunteers began to make wiki pages specifically for tracking its mistakes (Wikidata, Italian Wikipedia, etc.). These mistake reports were essential for helping us recognize and mitigate issues that showed up in our AIs’ predictions. Interestingly, it was difficult for any individual to see any of the problematic trends. But when we worked together to record ORES’ mistakes in a central location, it became easier to see trends and address them. You can watch a presentation by one of our research scientists on some of the biases we discovered and how we mitigated the issues.

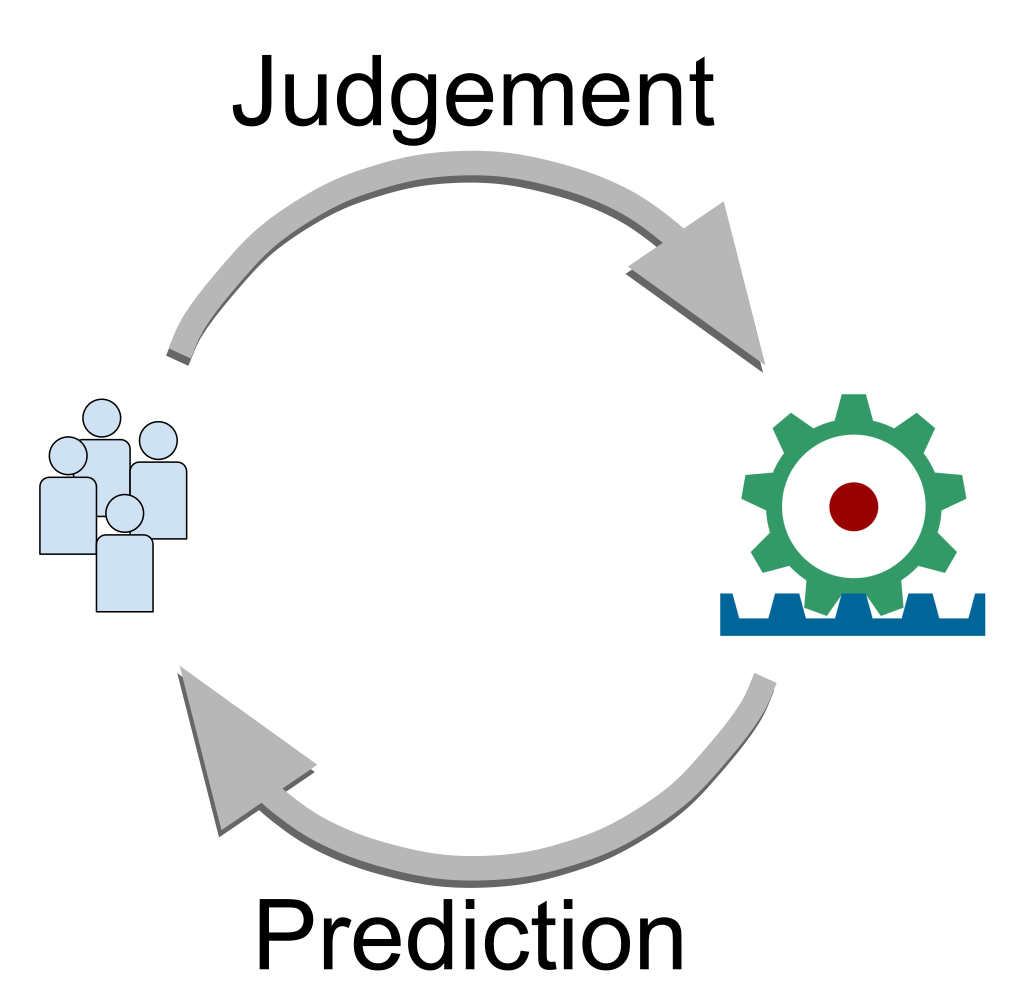

By observing how Wikipedians gathered reports, we were able to recognize a set of pain points that made the process of auditing ORES difficult. From that, we’ve begun to design JADE—the Judgement and Dialog Engine. JADE is intended to make the work of critiquing our AIs easier by providing standardized ways to agree or disagree with an AI’s judgement. We’re building in ways for our volunteers to discuss and refine their own examples for training, testing, and checking on ORES in ways that are just too difficult to do with wiki pages.
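As a rough illustration of why pooling reports helps, here is a small sketch of how standardized agree/disagree records could be aggregated to surface a trend that no single report shows. This is not JADE's actual schema or API: the `Judgement` record, its fields, the `editor_group` labels, and all of the data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Judgement:
    rev_id: int         # hypothetical revision ID
    editor_group: str   # hypothetical grouping of the edit's author
    ai_flagged: bool    # whether the model flagged the edit
    human_agrees: bool  # whether a volunteer agreed with the model


def disputed_flag_share_by_group(judgements):
    """For each group, the share of AI-flagged edits that a human disputed."""
    flagged = defaultdict(int)
    disputed = defaultdict(int)
    for j in judgements:
        if j.ai_flagged:
            flagged[j.editor_group] += 1
            if not j.human_agrees:
                disputed[j.editor_group] += 1
    return {group: disputed[group] / flagged[group] for group in flagged}


# Invented reports, pooled in one place.
judgements = [
    Judgement(1, "newcomer", True, False),
    Judgement(2, "newcomer", True, False),
    Judgement(3, "newcomer", True, True),
    Judgement(4, "newcomer", False, True),
    Judgement(5, "experienced", True, True),
    Judgement(6, "experienced", True, True),
    Judgement(7, "experienced", True, False),
    Judgement(8, "experienced", True, True),
]

shares = disputed_flag_share_by_group(judgements)
for group, share in sorted(shares.items()):
    print(f"{group}: {share:.0%} of flags disputed")
```

In this invented data, flags on one group's edits are disputed far more often than flags on the other's. That kind of gap is the sort of trend that only becomes visible once many individual reports share a common structure.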

Open auditing and the future

We think we’re onto something here. We need ORES and other AIs in order to help our volunteers scale up their wiki-work—to build Wikipedia and other open knowledge projects efficiently and in a way that aligns with our values of open knowledge. JADE is intended to put more power into our volunteers’ hands, to help us make sure that we can take full advantage of AIs while efficiently detecting and addressing the biases and bugs that will inevitably appear. In this case, we’re hoping to lead by example. While some organizations responsible for managing online platforms actively prevent audits of their algorithms to protect their intellectual property (see the lawsuit by C. Sandvig et al.[3]), we’re preemptively opening up our AIs to public audits in the hope of making them better and more human.

Aaron Halfaker, Principal Research Scientist, Scoring Platform

Wikimedia Foundation

Footnotes

1. Hope Reese, “It’s time to become aware of how machines ‘watch, judge, and nudge us,’ says Zeynep Tufekci,” TechRepublic, 29 September 2015, https://web.archive.org/web/20210125123005/http://www.techrepublic.com/article/its-time-to-become-aware-of-how-machines-watch-judge-and-nudge-us-says-zeynep-tufekci/.

2. EpochFail, とある白い猫, and He7d3r, “Revision scoring as a service,” The Signpost, 18 February 2015, https://en.wikipedia.org/wiki/Wikipedia:Wikipedia_Signpost/2015-02-18/Special_report.