A galloping overview

To start, let’s get a bird’s-eye view of the parts of the search process: text comes in and gets processed and stored in a database (called an index); a user submits a query; documents that match the query are retrieved from the index, ranked based on how well they match the query, and presented to the user. That sounds easy enough, but each step hides a wealth of detail. Today we’ll focus on part of the step where “text gets processed”—and look at tokenization.

Human v. computer: A pale imitation

Humans and computers have very different strengths, and what is easy for one can be incredibly hard for the other. A task like “sum all numbers up to 1,000 that are divisible by 3 or 5 but not both” is easy enough to get a computer to do, but tedious and error-prone for a human. (My computer tells me the answer is 201,003, by the way, if you want to check your work.[1])
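A minimal Python sketch of that computation might look like the following. The function name is made up for illustration, and the sketch assumes that “up to 1,000” includes 1,000 itself.

```python
def sum_divisible_by_3_or_5_not_both(limit: int) -> int:
    """Sum the integers from 1 to limit (inclusive) that are divisible
    by exactly one of 3 and 5."""
    # The != acts as an exclusive-or on the two divisibility tests.
    return sum(
        n for n in range(1, limit + 1)
        if (n % 3 == 0) != (n % 5 == 0)
    )


print(sum_divisible_by_3_or_5_not_both(1000))  # 201003
```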

On the other hand, if I say, “I saw the bird flying over the mountains” you will probably assume the bird was flying above me. But if I say, “I saw the city flying over the mountains” you will probably assume that I was flying over the city (most likely in a plane). A computer could reasonably consider both interpretations for any sentence of the form “I saw the ____ flying over the mountains”. It’s possible—but very complicated—to get a computer to make a reasonable choice in this situation. Options include not deciding by giving up on trying to analyze the sentence so deeply (shallow parsing!), using statistics to choose the most common interpretation, using machine learning to choose mysteriously from among the options, or trying to encode some form of common sense and general information so that the computer knows that birds are more likely to fly than people, but people are more likely to fly than cities.[2]

The ease that humans have with language often hides most of the complexity, knowledge, and decision-making that goes into using words and giving them meaning. People generally aren’t even aware of how much they do to process language—at least not until they have to try to get a computer to do it.[3]

Since full-blown artificial intelligence that is able to read millions of pages of information and provide the answer to any question is not yet available to everyone, what do we do? We pretty much have to fake it ’til we make it by working really hard to get a pale imitation of what humans do with ease.

A token of my affection

The first step in processing a text, which may seem almost comically simple to a human, is “tokenization”, which means breaking the text up into “tokens”. These tokens are the items that we’ll store in our index and search for later to find relevant documents. In English, many other European languages, and other languages that use the Latin or Cyrillic alphabets, tokenization is usually pretty much the same as breaking the text up into words, though there are still some details to attend to. You can’t just separate words by spaces when processing text.

You’ve also got to deal with punctuation. In the preceding paragraph, we have “text” and “text,” and “text.”, which we probably want to treat as being the same.[4] We also probably don’t want to create a token with an em dash in it, like “language—at”, even though that string occurs a couple of paragraphs back. But what about “language-specific” with a hyphen? Or text formatted for print, in which “lan- guage” occurs across a line break? Are “sportsball”, “sports-ball”, and “sports ball” all the same, or different? Do periods sometimes matter, as with “Cal” as in “Calvin” versus “Cal.” as in “California”? What about “NASA” and “N.A.S.A.”, and how does any decision you make there affect “a.m.” and “am”?

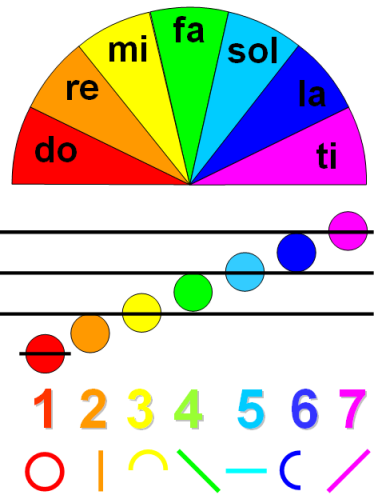

The fun with punctuation continues! You probably don’t want to split words on apostrophes, in English at least, so breaking up don’t into “don” and “t” is a bad idea.[5] But what about words with punctuation at the margins? If I quote something with ‘single quotes’, we probably don’t want to treat ‘single as different from single, or quotes’ as different from quotes. And it’s likely that ’tis and ’twas (poetic contractions for “it is” and “it was”) are the same as tis and twas—unless you are discussing numbers in Scots or solfège. If you want to break up words_with_underscores, what do you do with “____” above, and is “____” meaningfully different from “___” or “_____”? What about forty underscores in a row?
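To make a few of these choices concrete, here is a rough Python sketch that compares splitting on spaces with one possible regex-based rule. The function names and the rule itself are assumptions chosen for illustration, not a description of any production tokenizer: the rule treats runs of letters and digits as tokens, allows an apostrophe only between two such runs, and treats underscores, hyphens, dashes, and periods as separators.

```python
import re

# A "word" here is a run of letters and digits: \w minus the underscore.
WORD = r"[^\W_]+"
# A token is a word, optionally followed by apostrophe-joined words
# (straight or curly apostrophe), so "don’t" stays in one piece.
TOKEN_RE = re.compile(rf"{WORD}(?:['’]{WORD})*")


def naive_tokens(text):
    """Split on whitespace only; punctuation stays glued to the words."""
    return text.split()


def simple_tokens(text):
    """Keep letter/digit runs, allowing apostrophes only inside a token."""
    return TOKEN_RE.findall(text)


print(naive_tokens("text text, text."))
print(simple_tokens("text text, text."))
print(simple_tokens("don’t ‘single quotes’ ’tis"))
print(simple_tokens("language—at words_with_underscores ____"))
```

Under this rule, “text,” and “text.” both come out as “text”, and the quotation marks around ‘single quotes’ disappear. The same rule also splits “language-specific” in two, reduces “Cal.” to “Cal”, and throws away runs of underscores entirely, so each of the questions above still needs a deliberate answer.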

Space, the final frontier

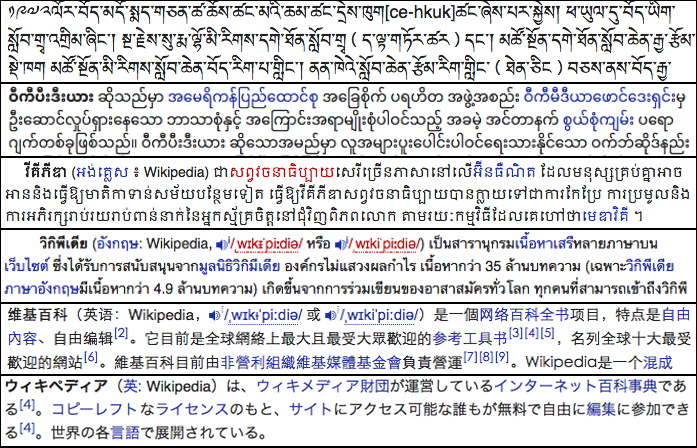

In other languages, the process of tokenization can be much harder. Some writing systems don’t use or don’t require spaces or other delimiters between words, like Canadian Aboriginal syllabics, Chinese hanzi, Japanese kanji and kana, Thai script, and others. Breaking up spaceless text into words in particular is called “word segmentation”.

There are several approaches to word segmentation. A common one is to have a large dictionary of known words, and at every step choose how to split up words based on some heuristic, such as picking the longest possible word or the most common word, or the set of words that are on average the most common. In that last approach, for example, segmenting a chunk of text into one very common word and one exceptionally rare word is not as good a choice as two fairly common words. Of course, dictionaries don’t work so well on unknown words and names, or misspelled words.
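As a sketch of the simplest of these heuristics, picking the longest possible word at each step, the following Python function walks through the text from left to right. The function name and the tiny dictionaries are hypothetical, and English with the spaces removed stands in for a spaceless script. Real segmenters are considerably more sophisticated.

```python
def segment_longest_match(text, dictionary):
    """Greedy left-to-right segmentation: at each position, take the
    longest dictionary word that matches."""
    max_len = max((len(word) for word in dictionary), default=1)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first. If nothing in the dictionary
        # matches, fall back to a single character (an unknown word).
        for length in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + length]
            if length == 1 or candidate in dictionary:
                tokens.append(candidate)
                i += length
                break
    return tokens


print(segment_longest_match(
    "atokenofmyaffection",
    {"a", "token", "of", "my", "affection"},
))

# The longest word is not always the right word.
print(segment_longest_match(
    "thetabledownthere",
    {"the", "theta", "table", "bled", "down", "own", "there"},
))
```

The first call recovers “a token of my affection”. The second returns “theta bled own there” rather than “the table down there”, which is the kind of mistake that frequency-based heuristics are meant to reduce.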

Heuristics can help, too. For example, words in English[6] are much more likely to end in -ies or -ng than to start with ies- or ng-. Of course, there are, for example, some Latvian words starting with ies- on English Wikipedia, like iesniegtie and iespējas, and the Vietnamese name Nguyen occurs in thousands of articles. Hence “heuristic” and not “rule”.

Machine learning approaches can combine information from dictionaries and heuristics, lots of other evidence and experience, and a bit of statistical magic to come up with a system that generalizes to previously unknown input, though when it goes wrong, it can go spectacularly wrong. Whether that’s hilarious or a disaster depends on your use case.[7]

An alternative to word segmentation, when the process is too difficult or too expensive, is to break text up into overlapping n-grams, which are sequences of n characters. Breaking English text into trigrams (n=3) would turn “a token of my affection” into this sequence of tokens: “a t”, “ to”, “tok”, “oke”, “ken”, “en ”, “n o”, “ of”, “of ”, “f m”, “ my”, “my ”, “y a”, “ af”, “aff”, “ffe”, “fec”, “ect”, “cti”, “tio”, “ion”. That’s not a great way to search for something, but it’s better than nothing. Sizing the n-grams is also more an art than a science. Make them too short—say, just one character—and you match almost anything. Too long—like ten characters—and the only thing you are likely to get as a search result is the exact phrase you searched for.
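Generating overlapping character n-grams takes only a line or two of code. Here is a minimal Python sketch, with a function name chosen for illustration, that reproduces the trigram list above.

```python
def char_ngrams(text, n):
    """Return every overlapping run of n characters in text, in order.
    Text shorter than n characters yields an empty list."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]


trigrams = char_ngrams("a token of my affection", 3)
print(len(trigrams))  # 21
print(trigrams)
```

Changing `n` is all it takes to experiment with shorter or longer n-grams.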

What to do? What to do?

There often aren’t any good, straightforward answers to these concerns—at least not at the level of simple rules you can apply just by looking at a text in any language, out of context, and without any common sense understanding about the language being processed. A common compromise, though, is to find exactly that kind of simple rule that works most of the time and put up with the less common cases where it doesn’t work—at least until you can’t stand them any more.

A frequent enough mode of failure is what I will call a lack of textual imagination—which can happen to any kind of text processing. Any algorithm developed with a very narrow focus on one language or writing system can do silly things, ranging from failing to recognize French «guillemets» or German „low“ opening quotation marks as punctuation to crashing the computer![8]

A lot of Wikimedia projects are quite likely to have text—words, names, even longer passages—that are in languages and writing systems other than the main language of the wiki. Most of the large Wikipedias and Wiktionaries have text in dozens of scripts, for example. So even an “English” tokenizer would have to do something at least semi-reasonable with Chinese and Japanese text, and it shouldn’t crash on Telugu. (See footnote [8].)

Simplicity is often good (or at least good enough, and cheap)—and robustness is required—but there’s almost no end to the complexity you can bring to bear on tokenization if you have the computational resources available to support it in a sufficiently timely fashion. Let’s look at some of the possibilities.

Now let’s do it in hard mode!

While I said that tokens are more-or-less the same as words in English, they don’t have to be—as we saw with n-grams, which are often smaller than words. Tokens can be larger than words, too—such as general noun phrases or specific people, places, and things.

Using a part-of-speech tagger (or other more complicated parsing), it’s possible to identify noun phrases throughout a piece of text. Noun phrases usually refer to more specific things than do their component parts. In the introductory text above, we have the words “blog”, “engines”, “posts”, “projects”, “search”, and “Wikimedia”. It’s arguable that those are more useful as the phrases “blog posts”, “search engines”, and “Wikimedia projects”. As we’ll see in a later installment in this series, search engines often rank documents higher when the search terms are closer together, so the benefit of such phrases may not outweigh the cost of identifying them.

Similar to finding phrases, it’s also possible to find “named entities”—people, places, or things mentioned by name. As with many language-related tasks, this often seems very easy for humans, but it can be quite challenging for computers. However, when it works, it can distinguish entities in a way that simpler searching cannot. For example, searching for John Smith might return results for “Edward John Smith” (the captain of the Titanic)—after all, both words are right there next to each other! As humans, we know that’s probably not a great match, because the article about him is titled “Edward Smith”, indicating that the good captain probably was not known primarily by his middle name.

What’s on-wiki? What’s in the pipeline?

Tokenization is currently not entirely consistent across languages. Sometimes that makes sense—the tokenizer on English-language wikis just chops Chinese text into single characters, while the tokenizer on Chinese-language wikis attempts to do proper word segmentation. Other times, differences make less sense; some wikis break words on underscores, while others don’t. I hope to eventually look into these differences and try to make things more consistent across languages in cases where it seems appropriate.

Chinese-language wikis support both Traditional and Simplified characters—often used together in the same document—so we first convert everything to Simplified characters before doing word segmentation (the word segmenter we have only works on Simplified characters). We’ll talk more about this and other kinds of normalization in a future post.

I tested a Japanese word segmenter in the summer of 2017, but unfortunately it didn’t work well enough. As a result, Japanese-language wikis use a bigram tokenizer, which breaks up the text into overlapping tokens of two characters. Korean uses the same bigram tokenizer, while Thai has its own special Thai tokenizer (which I am not yet familiar with).

For quite a while I’ve been looking at improving support for various languages where I can find it or build it. The improvements to Chinese—using the Traditional-to-Simplified conversion before applying a word segmenter—came out of that work.

The Search Platform team is in the earliest stages of considering whether entity recognition is something we should pursue over the next year. It’s too early to say whether we will, but it offers a lot of interesting possibilities.

Further reading / homework

You can read more about the complexities of supporting Traditional and Simplified Chinese characters (and other multi-script languages) in an earlier blog post, “Confound it!”

You can delve much too deeply into the complexities of searching for names in another blog post: “Hello, my name is ________” (Look!—more of those pesky underscores!)

If you can’t wait for next time, I put together a poorly edited and mediocrely presented video in January of 2018, available on Commons, that covers the Bare-Bones Basics of Full-Text Search. It starts with no prerequisites, and covers tokenization and stemming, inverted indexes, basic boolean and proximity retrieval operations, TF/IDF and the vector space model of similarity, field-level indexing, using multiple indexes, and then touches on some of the elements of scoring.

Up next

In my next blog post, we’ll look a bit at text normalization but our main focus will be on stemming, which involves reducing a word to its base form, or a reasonable facsimile thereof.

Trey Jones, Senior Software Engineer, Search Platform

Wikimedia Foundation

Footnotes

1. If you like weird number patterns, here’s a fun one. The sum of all numbers up to 100 that are divisible by 3 or 5 but not both is 2103. Up to 1,000, it’s 201003. Up to 10,000, it’s 20010003. Up to 100,000: 2000100003. Up to 1,000,000: 200001000003. This is another great example of computers and humans being good at different things. The computer would never have noticed the pattern (or even have tried to look for it), but I never would have been able to find it if I had to do the sums manually.

2. In the right context, a city could be flying over the mountains. In a science fiction or superhero movie, for example. The Avengers had to deal with a flying city a while back.

3. Though it will smack you in the face if you try to learn a language that makes some distinction your native language does not, like grammatical gender, animacy, or evidentiality.

4. This kind of thing is why some linguists and computer scientists have developed an annoying habit of almost always putting their punctuation outside of quotes. Orthographic conventions should not take precedence over accuracy!

5. On the other hand, accurate delineation of a string with quotes can also be confusing if the string has quotes or apostrophes in it, in which case, italics to the rescue!

6. While we generally don’t need word segmentation in English, it sure is convenient for examples!

7. An example that works at the time I’m writing this (but may change in the distant future—like next week) is translating “山廿凡十 十廿巨 廿巨亡片” from Chinese to English using Google Translate, which uses neural networks and machine learning. The phrase isn’t actually Chinese; the characters are chosen to look like “WHAT THE HECK” in English. Translate it with Google—then add an exclamation point to it. Then add another one. And another one. No rules-based or heuristic approach would change seemingly at random like that. Of course, on real Chinese text, Google Translate usually works fairly well.

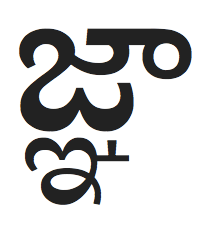

8. No, really! In February of 2018, it was reported that Telugu characters could crash Apple apps and devices. You can throw caution to the wind and read the English Wikipedia article about it, which contains the “killer characters”.