A galloping overview

As we have done before, let’s get a bird’s-eye view of the parts of the search process: text comes in and gets processed and stored in a database (called an index); a user submits a query; documents that match the query are retrieved from the index, ranked based on how well they match the query, and presented to the user. That sounds easy enough, but each step hides a wealth of detail. Today we’ll focus on the step where “text is stored in an index”.

Also keep in mind that, as discussed in the first installment in this series, humans and computers have very different strengths, and what is easy for one can be incredibly hard for the other.

A place for my stuff

In previous installments, we’ve looked at how we break text into tokens (which are approximately words,[1] in English), normalize them (by converting to lowercase, removing diacritics, and more), remove stop words, and stem the remainder (converting the words to their root forms). Now that we have our normalized stemmed words, where do we put them? In an inverted index!

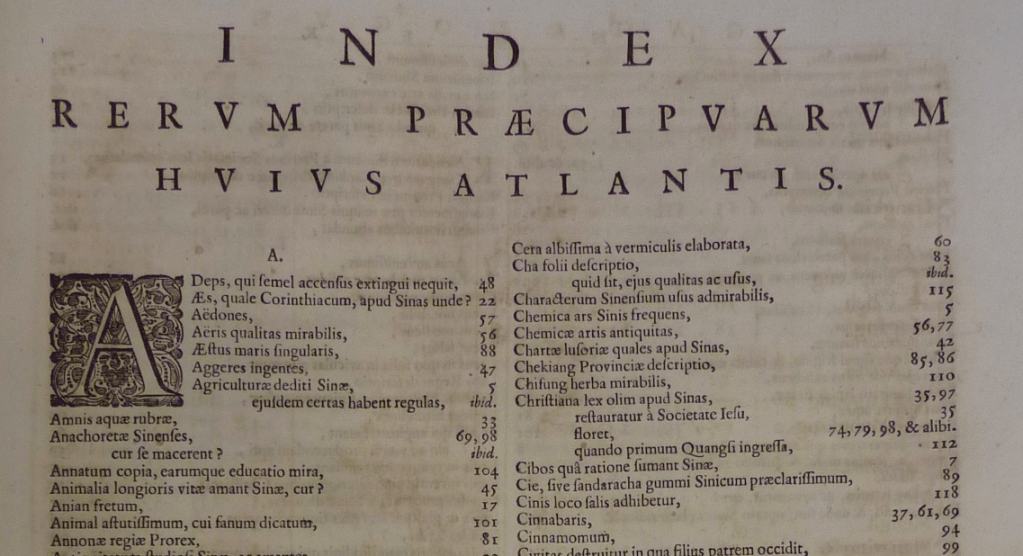

An inverted index is a more complex, database-like version of the kind of index you find in the back of a book or—as more mature readers may recall using at some point—a card catalog. At a minimum, we want to record where each word occurred, so we can find it again easily.

Depending on the search features you want to support, you might just record the document ID[2] for each occurrence of a word, though typically the word number (roughly, “it’s the 354th word”) is recorded for more complex searching, and the character offset (“it spans characters 93 through 98”) is also recorded to facilitate, for example, highlighting in search results.

Let’s take a look at how this works with a couple of sample documents.

- Document 1: “A dog chased the cats.”

- Document 2: “The cat chases the mice.”

After tokenizing, normalizing (here, just lowercasing), removing stop words (a, and the), and stemming (chased/chases → chase, cats → cat, mice → mouse), we could have the following inverted index, where “D1:W2” means “in document #1, this is word #2”:

- cat: D1:W5, D2:W2

- chase: D1:W3, D2:W3

- dog: D1:W2

- mouse: D2:W5

The words in a real index are not necessarily stored in alphabetical order, as they are shown here or as they would be in the back of a book; search engines often use more complex data structures to maximize lookup speed. Really, that’s pretty much it for the basics of building an index, because all the hard work was figuring out what words to put in the index: tokenizing, normalizing, dropping stop words, stemming, etc.
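To make that concrete, here is a minimal sketch in Python that rebuilds the mini index from the two sample documents above. The stop word list and the stemming lookup table are toy stand-ins I made up for this example (a real stemmer is rule-based and far more general), and the function names `analyze` and `build_index` are hypothetical, not part of any search engine's API.

```python
from collections import defaultdict
import re

# Toy stand-ins for the real analysis steps (assumptions for this sketch only).
STOP_WORDS = {"a", "the"}
STEMS = {"chased": "chase", "chases": "chase", "cats": "cat", "mice": "mouse"}


def analyze(text):
    """Yield (word_number, term) pairs; word numbers start at 1 and count stop words."""
    tokens = re.findall(r"\w+", text.lower())  # tokenize and lowercase
    for position, token in enumerate(tokens, start=1):
        if token in STOP_WORDS:
            continue  # dropped from the index, but it still used up a word number
        yield position, STEMS.get(token, token)


def build_index(documents):
    """Map each term to a list of (doc_id, word_number) postings."""
    index = defaultdict(list)
    for doc_id, text in documents.items():
        for position, term in analyze(text):
            index[term].append((doc_id, position))
    return dict(index)


documents = {1: "A dog chased the cats.", 2: "The cat chases the mice."}
index = build_index(documents)

for term in sorted(index):
    postings = ", ".join(f"D{doc}:W{word}" for doc, word in index[term])
    print(f"{term}: {postings}")
```

Running it prints the same four entries as the list above, starting with `cat: D1:W5, D2:W2`. A plain dictionary is enough here; it is one simple example of a structure that is not kept in alphabetical order.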

I want more, more, more

As mentioned above, we might want to record more information than just the document ID and word position. Character offset—e.g., dog occupies the 3rd through 5th characters[4] of Document 1 above—can be used for highlighting search terms in text snippets. Character offsets are especially useful when your original word may not be the exact word that was indexed, as is the case with stemmed words and thesaurus terms. For example, our mini index above says that cat is word 5 in document 1, but the actual word in the original text is cats, so knowing its offset (18–21) makes it easier to highlight. Also, if you have indexed lawyer when your original word was attorney, you are really going to need those offsets to know where it occurred in the original text.
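Here is a small Python sketch of why offsets matter for highlighting. It records where each original word sits in the text alongside the term that was indexed for it. The stemming table and the function names are assumptions for illustration only. Note that Python counts from 0 and treats the end of a span as exclusive, so cats shows up as (17, 21) rather than the 18–21 used in the prose above.

```python
import re

STEMS = {"cats": "cat", "chased": "chase"}  # toy stemmer, assumed for this sketch


def postings_with_offsets(text):
    """Return (term, start, end) triples using 0-based, end-exclusive offsets."""
    postings = []
    for match in re.finditer(r"\w+", text):
        token = match.group().lower()
        postings.append((STEMS.get(token, token), match.start(), match.end()))
    return postings


def highlight(text, term):
    """Wrap every original word that was indexed as `term` in asterisks."""
    out, last = [], 0
    for indexed, start, end in postings_with_offsets(text):
        if indexed == term:
            out.append(text[last:start])
            out.append("*" + text[start:end] + "*")
            last = end
    out.append(text[last:])
    return "".join(out)


doc1 = "A dog chased the cats."
print(highlight(doc1, "cat"))  # A dog chased the *cats*.
```

The query term is cat, but the highlighted span covers all of cats, because the offsets point back into the original text instead of relying on the indexed form.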

An index can also keep track of additional positional information, like which sentence, paragraph, or section a word was found in, which could be useful for scoring. Nowadays, though, search engines often don’t bother with sentence- or paragraph-level tracking, and as a result you will sometimes see the last word of one sentence and the first word of the next highlighted together.[5] That doesn’t usually indicate the same quality of match as two adjacent words in the same sentence, but skipping the extra tracking is a shortcut that works well enough most of the time. Search on Wikipedia does, however, give extra weight during scoring to matches found in the title or in the opening text of an article.

Depending on the use cases you want or need to support, there are several ways of keeping track of these different kinds of information. You could annotate a word in the index to indicate that it’s a stemmed word or thesaurus word (and not the original word), which might then be used during scoring and ranking. You could also annotate structural information (like the fact that a word came from the title of an article), or you could incorporate that information into the term itself, indexing something analogous to title:dog to keep track of instances of dog that appear in titles. Another option would be to have a completely separate index just for titles. As with any software, different use cases can point toward different architectures. A title-only index is going to be smaller and may return results faster, but it might also make it harder to combine title-based results with results from another index that contains everything but the title.
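As a rough illustration of the "incorporate it into the term" option, the Python sketch below indexes each word under a key like title:dog or body:dog. The field names, the sample documents, and the `index_fields` helper are all made up for this example; it shows one possible layout, not how any particular search engine stores its fields.

```python
from collections import defaultdict


def index_fields(doc_id, fields, index):
    """Add one posting per word, keyed by a 'field:term' string."""
    for field, text in fields.items():
        for word in text.lower().split():
            index[f"{field}:{word}"].add(doc_id)


index = defaultdict(set)
index_fields(1, {"title": "dog training", "body": "how to train a dog"}, index)
index_fields(2, {"title": "cat care", "body": "a cat is not a dog"}, index)

title_hits = index.get("title:dog", set())  # documents with dog in the title
body_hits = index.get("body:dog", set())    # documents with dog in the body

print(sorted(title_hits), sorted(body_hits))
```

Both kinds of match live in one index here, so a ranker could, for instance, treat documents in `title_hits` as stronger matches than those found only in `body_hits`. With a separate title-only index, the two result sets would have to be merged after the fact.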

Despite the potential complications, it is often worthwhile to have multiple indexes because they can allow you to search in very different ways.

Wisdom of the crowd

One of the problems with stop words is that, albeit rarely, important phrases can be made up entirely of stop words, like the opening words of Hamlet’s famous soliloquy: “To be, or not to be”. It’s unusual for words that usually carry little specific meaning to end up being so weighty!

For on-wiki search we solve the stop word problem by having two indexes, which we call “text” and “plain”. All of the processing we’ve talked about so far is applied to the text field, while the plain field only undergoes tokenization and normalization—no stemming is applied and no stop words are removed—so to be or not to be is readily found in the plain index.

In the more typical case, we combine the scoring results from matches in the text field and matches in the plain field to rank the final results. So while the searches hope and change, and hoping changing—both of which are reduced to hope change in the text field—get the same number of results on English Wikipedia (85,736 at the time of writing), the ordering of the results is different. “Hope and Change” is a redirect to the article on the “Barack Obama 2008 presidential campaign”, so matching that exact phrasing pulls it to the top of the results. The song “Everybody’s Changing” (from the album Hopes and Fears) jumps from somewhere between #1400 and #1500 for hope and change to #2 for hoping changing, in large part based on the exact match to one of the words in the title.
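The difference between the two fields can be sketched in a few lines of Python. The stop word list and stemming table below are tiny toy versions I invented for this example, and `plain_analyze` and `text_analyze` are hypothetical names, not the actual on-wiki analysis chain.

```python
import re

# Toy stop list and stemmer, assumed for this sketch; real analyzers are far larger.
STOP_WORDS = {"a", "and", "the", "to", "be", "or", "not"}
STEMS = {"hoping": "hope", "changing": "change"}


def plain_analyze(text):
    """Plain field: tokenize and lowercase only."""
    return re.findall(r"\w+", text.lower())


def text_analyze(text):
    """Text field: tokenize, lowercase, drop stop words, then stem."""
    return [STEMS.get(t, t) for t in plain_analyze(text) if t not in STOP_WORDS]


for query in ("to be or not to be", "hope and change", "hoping changing"):
    print(f"{query!r}: text={text_analyze(query)} plain={plain_analyze(query)}")
```

In this toy setup, to be or not to be leaves nothing at all in the text field but keeps all six tokens in the plain field. The other two queries both come out as hope, change in the text field, while their plain forms differ, which is the kind of signal that lets an exact match rank higher.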

We have other indexes that support search for other forms of the text found in the various wikis. The keyword insource: searches the raw wikitext version of an article, which allows you to match not only markup (like template names and specific tags) but also “hidden” text—like text in links or comments—that a reader doesn’t normally see, but which an editor might find quite interesting. The keyword intitle: is similar, but only applies to titles and redirects. Both intitle: and insource: support regular expression searches, too, which use specialty indexes that allow you to search for really complex patterns.[6] There are keywords that search indexes for category tags (incategory:) and templates (hastemplate:) found in each article as well.

Of course, there are also indexes for different collections of documents (as opposed to multiple indexes of the same documents), such as for each namespace (User, Talk, Help, etc., etc.).

Further reading / Homework

If you can’t wait for next time, I put together a video back in January of 2018, available on Commons, that covers the Bare-Bones Basics of Full-Text Search. It starts with no prerequisites, and covers tokenization and stemming, inverted indexes, basic boolean and proximity retrieval operations, TF/IDF and the vector space model of similarity, field-level indexing, using multiple indexes, and then touches on some of the elements of scoring.

Up next

In my next blog post, we’ll look at query processing and basic boolean retrieval operations.

Trey Jones, Senior Software Engineer, Search Platform

Wikimedia Foundation

• • •

Footnotes

1. In most texts in English and other European languages, most tokens are words, and most words are tokens, so the two terms get used interchangeably. I tend to use “words” rather than “tokens” because the term is more familiar to most readers, but in the context of searching and indexing, I really mean “tokens”; see the blog post “A token of my affection” for more details on tokenization and why it’s harder than it looks.

2. Document IDs are often just numbers, assigned as the documents are added to the index. Wikipedia and other wiki projects work that way, so the ID for the article on inverted indexes is 3125116. Using increasing numbers is convenient because the IDs are guaranteed to be unique. Titles or any other attribute may not be guaranteed unique—Wikipedia article titles happen to be unique, but in another data set—like general web pages—they need not be. Using increasing numbers also means that some numbers may be unassigned, because the corresponding document has been deleted. So, English Wikipedia doesn’t have an article with ID #1. At the moment, the smallest ID still in use is 10, for AccessibleComputing,[3] which is now a redirect to Computer accessibility.

3. Note that “AccessibleComputing” is in CamelCase, which was used in the very early days of wikis to indicate links to other topics. Thanks to the fact that we still have the complete edit history of the AccessibleComputing page, we can see the first version of it, which does seem to have used CamelCase for links, including “LegalIssuesInAccessibleComputing”—which makes it clear why CamelCase was ditched in favor of more explicit linking syntax.

4. Readers who are detail-oriented may have counted to make sure I got my numbers right. Readers who are programmers will complain that the correct span is “2nd through 4th” because counting starts at 0 in many programming languages. In fact, that may be what actually happens internally in some/many/most search engines[citation needed]; it’s what our underlying search engine, Elasticsearch, does.

The practice stems from the computational stone age—when computers were literally millions of times slower than today—and even “subtract one” was a moderately costly operation. Since the first item in a list is right there at the beginning of the array, you don’t need to do anything to get to it. To get to the second item, you just have to skip the first. To get to the 15th, you have to skip 14. Back in the old days, it was worth it to instead tell the computer that the beginning of the list starts at 0, so you skip 0 things to get to it, the next one is #1, and you skip 1 to get to it. To get to #14, you skip 14, etc. Today, it may seem kind of silly—but it’s tradition!

5. As an example, if you search English Wikipedia for snails dominated, you might be hoping to find an article about a time when snails dominated something or other. Instead you get this snippet as the top result: “in the markets and its surrounding streets, from car parts to land snails. Dominated by women traders, the market sells fresh produce, manufactured and”. To be fair, I had to go looking for an example that would behave like this, but they do crop up from time to time in the wild, on-wiki and in other search engines.

6. The details of regular expressions and regular expression searching are a bit beyond the scope of this blog post, but if you are familiar with the basics of regular expressions, the specialty index we use is pretty neat! For really complex patterns, there’s nothing to do but skim through all the text looking for spans of text that match the pattern. This is very inefficient and on big wikis like the larger Wikipedias, it will time out and not return full results. One trick we use is a trigram index, which tokenizes documents into overlapping three-character sequences and indexes those. We parse regex queries and try to find trigrams in them that we can use to limit the scope of what has to be inefficiently scanned. For example, descendant and descendent are both relatively common, so you might try to search for terms related to both on Wiktionary with the query intitle:/descend[ae]nts/. The search engine first finds documents that have all of these trigrams in the title: des, esc, sce, cen, end, and nts, and then scans just those for the full pattern—it’s a lot less work that way!
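Here is a heavily simplified Python sketch of the trigram trick, using the descend[ae]nts example. To keep it short, `required_trigrams` only understands patterns made of literal characters and bracketed character classes like [ae]; a real regex parser handles far more. The sample titles and all the function names are made up for this illustration.

```python
from collections import defaultdict
import re


def trigrams(text):
    """Return the set of overlapping three-character sequences in text."""
    return {text[i:i + 3] for i in range(len(text) - 2)}


def build_trigram_index(titles):
    """Map each trigram to the set of titles that contain it."""
    index = defaultdict(set)
    for title in titles:
        for gram in trigrams(title):
            index[gram].add(title)
    return index


def required_trigrams(pattern):
    """Trigrams every match must contain (literals and [...] classes only)."""
    literal_runs = re.split(r"\[[^\]]*\]", pattern)  # cut at each character class
    required = set()
    for run in literal_runs:
        required |= trigrams(run)
    return required


def regex_search(pattern, titles, index):
    """Narrow down candidates with the trigram index, then run the full regex."""
    needed = required_trigrams(pattern)
    if needed:
        candidates = set.intersection(*(index.get(g, set()) for g in needed))
    else:
        candidates = set(titles)  # no usable trigrams: scan everything
    return sorted(t for t in candidates if re.search(pattern, t))


titles = ["descendants", "descendents", "descending", "ascendants", "students"]
index = build_trigram_index(titles)
print(sorted(required_trigrams("descend[ae]nts")))
print(regex_search("descend[ae]nts", titles, index))
```

The first line of output lists the same six trigrams named above (in alphabetical order), and only descendants and descendents survive the filter, so the full pattern runs against two titles instead of five.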

Of course, running regexes over titles is faster and easier than running them over the full text of Wikipedia articles, just because there is so much less text to scan when limited to titles. And the trigram trick can’t help you if you use a crazy regex like /[Ѐ-ԯA-Za-zÀ-ɏɐ-ʯ]*([Ѐ-ԯ][A-Za-zÀ-ɏɐ-ʯ]|[A-Za-zÀ-ɏɐ-ʯ][Ѐ-ԯ])[Ѐ-ԯA-Za-zÀ-ɏɐ-ʯ]*/, which I sometimes do in my volunteer capacity, to find and fix errant Latin/Cyrillic homoglyphs. Everybody needs a hobby.